Lead scoring is one of those things every company claims to have, but very few ever turn it into something real. In fintech, the gap is even wider. When I joined the organization, qualification was almost entirely manual. BD and sales were reviewing websites one by one, interpreting business descriptions, searching for inconsistencies, guessing at risk signals, and making judgment calls about whether a lead was even worth calling. It wasn’t a skills issue. It was simply a process built around human interpretation, and interpretation doesn’t scale.

My first realization was that we didn’t need a “scoring model” at all. We needed an interpretive system. Something that could read context the way a person would: understand what a business actually does, spot contradictions, fill in missing details, and present a conclusion that matched how BD and sales already made decisions. The goal wasn’t to replace human judgment. The goal was to give teams a head start, so the time they spent researching could instead be spent selling.

Fintech qualification is a surprisingly tangled problem. A single lead can carry regulatory considerations, restricted categories, onboarding differences between regions, and unclear business models that only reveal themselves once you analyze the website or dig into public data. Two leads might look identical inside the CRM but behave completely differently in the real world. Before automation, this complexity meant slow responses, inconsistent routing, and ongoing debates about what “qualified” meant in North America versus Europe versus Southeast Asia. A simple numeric score wouldn’t fix that. What we needed was context at scale.

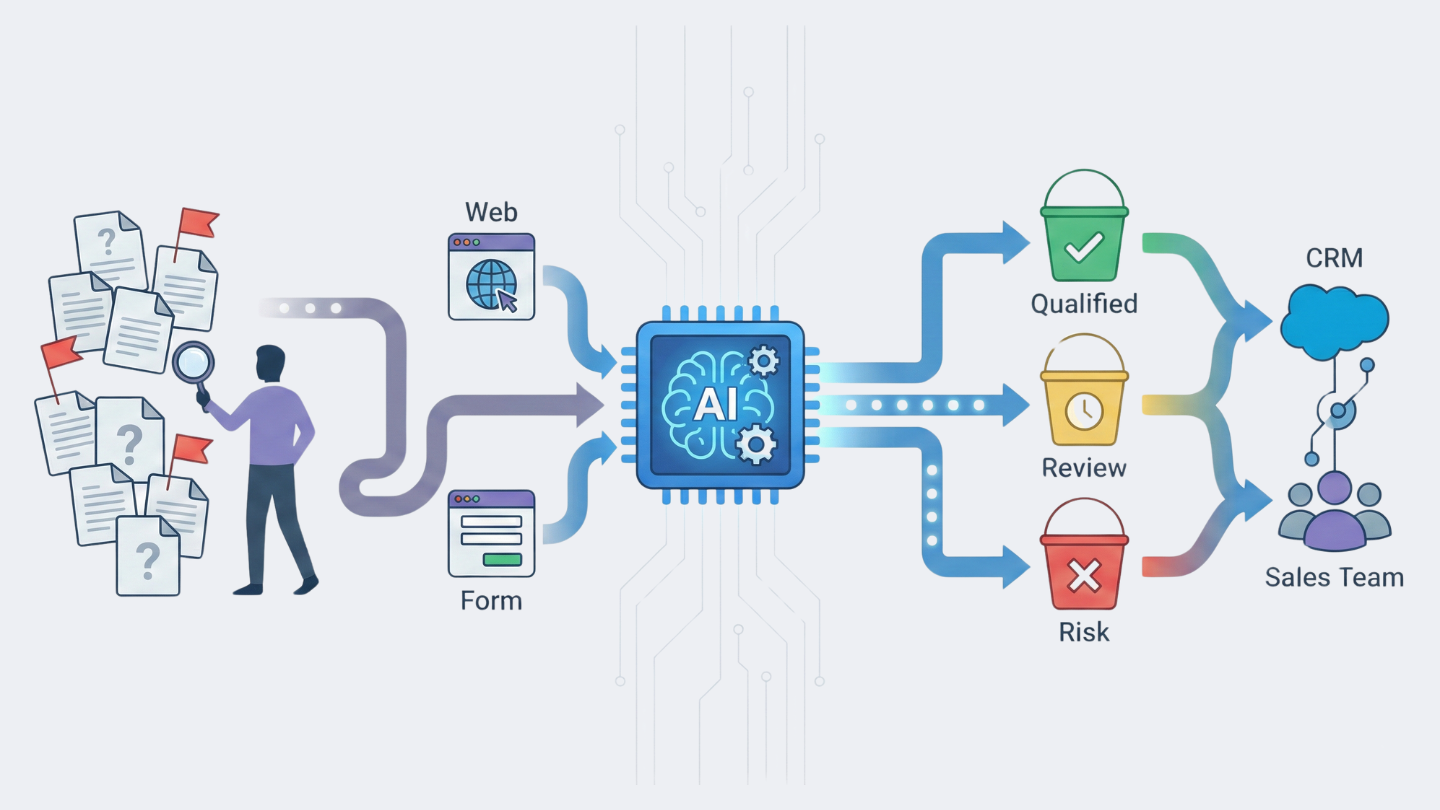

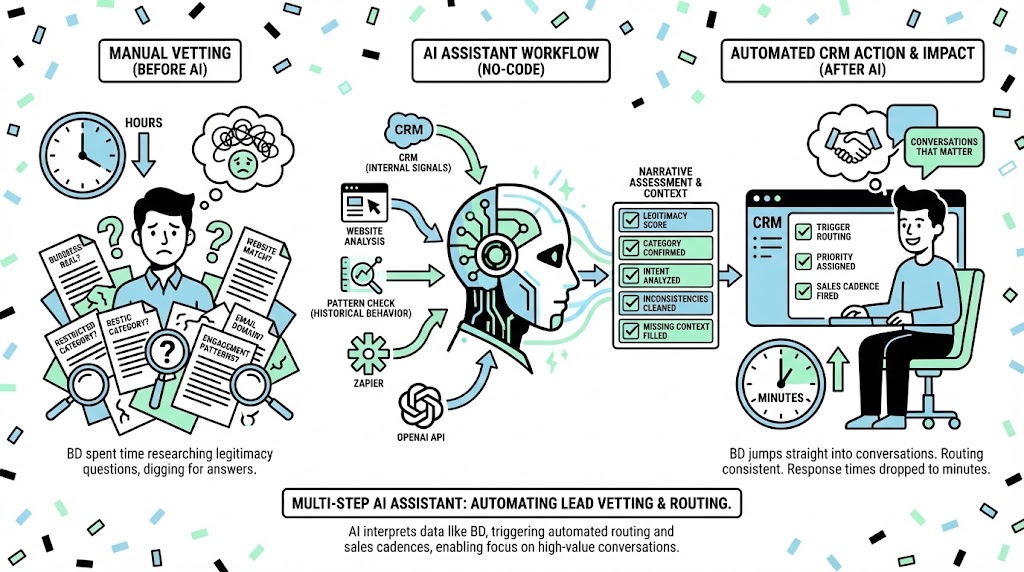

So I started by mapping out what BD and sales were actually trying to figure out during manual vetting. Was the business real? Did the website match the information in the form? Did the category fall into a restricted or high-risk bucket? Did the email domain belong to a real company, or was it a personal account? Did the engagement patterns show real intent? Once I saw the common threads, the job became clearer: the AI needed to see what they saw, and interpret it the way they interpreted it.
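To make a few of those vetting questions concrete, here is a minimal Python sketch. It is an illustration only, not the logic we actually ran: the `Lead` fields, the list of personal email domains, and the restricted categories are all hypothetical placeholders.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical examples; a real list would be longer and maintained over time.
PERSONAL_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}

# Hypothetical restricted categories, for illustration only.
RESTRICTED_CATEGORIES = {"gambling", "adult content"}


@dataclass
class Lead:
    email: str
    website: str
    category: str


def email_domain(email: str) -> str:
    """Return the lowercased part of the address after the last '@'."""
    return email.rsplit("@", 1)[-1].strip().lower()


def website_domain(url: str) -> str:
    """Return the host of a URL without a leading 'www.'."""
    # urlparse only recognizes the host when a scheme is present.
    if "://" not in url:
        url = "https://" + url
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def vetting_signals(lead: Lead) -> dict[str, bool]:
    """Answer three of the manual vetting questions as simple flags."""
    domain = email_domain(lead.email)
    return {
        "personal_email": domain in PERSONAL_EMAIL_DOMAINS,
        "email_matches_website": domain == website_domain(lead.website),
        "restricted_category": lead.category.strip().lower() in RESTRICTED_CATEGORIES,
    }
```

Flags like these only cover the mechanical questions. Whether the business is real, or whether the website contradicts the form, still needs interpretation, which is where the AI step comes in.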

Because we had no engineering resources to build this, everything had to be assembled from the tools we already used. I connected OpenAI’s API through Zapier, layered logic between Salesforce, HubSpot, and SalesLoft, and fed in signals from our internal CRM. What emerged was a multi-step assistant: a lead comes in, the AI analyzes the description and website, cleans up inconsistencies, fills in missing context, checks the lead against patterns in historical behavior, and sends back a narrative assessment. The CRM then uses that assessment to trigger routing, prioritization, and the correct sales cadence, all automatically.
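The real build lived in Zapier steps rather than in code, but the shape of the flow can be sketched in Python. This is a rough analogue under stated assumptions: `ask_model` stands in for whatever step calls the model, and the risk labels, queue names, and cadence names are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable

VALID_RISK = {"low", "review", "restricted"}  # hypothetical labels


@dataclass
class Assessment:
    summary: str  # narrative written for BD and sales
    risk: str     # one of VALID_RISK
    region: str


def build_prompt(description: str, website_text: str, region: str) -> str:
    """Assemble the context a person would look at during manual vetting."""
    return (
        "You are assisting a fintech BD team.\n"
        f"Region: {region}\n"
        f"Form description: {description}\n"
        f"Website text: {website_text}\n"
        'Reply as JSON with keys "summary" and "risk" '
        '(one of "low", "review", "restricted").'
    )


def assess(description: str, website_text: str, region: str,
           ask_model: Callable[[str], str]) -> Assessment:
    """Ask the model for a narrative assessment and parse it defensively."""
    raw = ask_model(build_prompt(description, website_text, region))
    try:
        data = json.loads(raw)
        risk = data.get("risk")
        summary = data.get("summary", "")
    except (json.JSONDecodeError, AttributeError):
        risk, summary = None, ""
    if risk not in VALID_RISK:
        risk = "review"  # anything unparseable or unexpected goes to a human
    return Assessment(summary=str(summary), risk=risk, region=region)


def route(assessment: Assessment) -> dict[str, str]:
    """Map an assessment to a queue and cadence (names are hypothetical)."""
    if assessment.risk == "restricted":
        return {"queue": "compliance", "cadence": "none"}
    if assessment.risk == "review":
        return {"queue": f"bd-{assessment.region}", "cadence": "manual-review"}
    return {"queue": f"sales-{assessment.region}", "cadence": "fast-track"}
```

The design choice worth noting is the fallback. If the model returns something that cannot be parsed, the lead lands in a human review queue instead of being routed on a guess, which keeps the assistant a head start rather than a replacement for judgment.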

The biggest impact wasn’t technical but psychological. Before the assistant, BD spent time researching legitimacy questions that didn’t actually move deals forward. After the assistant, those questions were already answered before BD ever touched the lead. BD no longer had to dig; they could jump straight into conversations that mattered. Response times dropped from hours to minutes. Routing became consistent across regions. Sales cadences fired immediately. And for the first time, everyone had the same definition of lead quality.

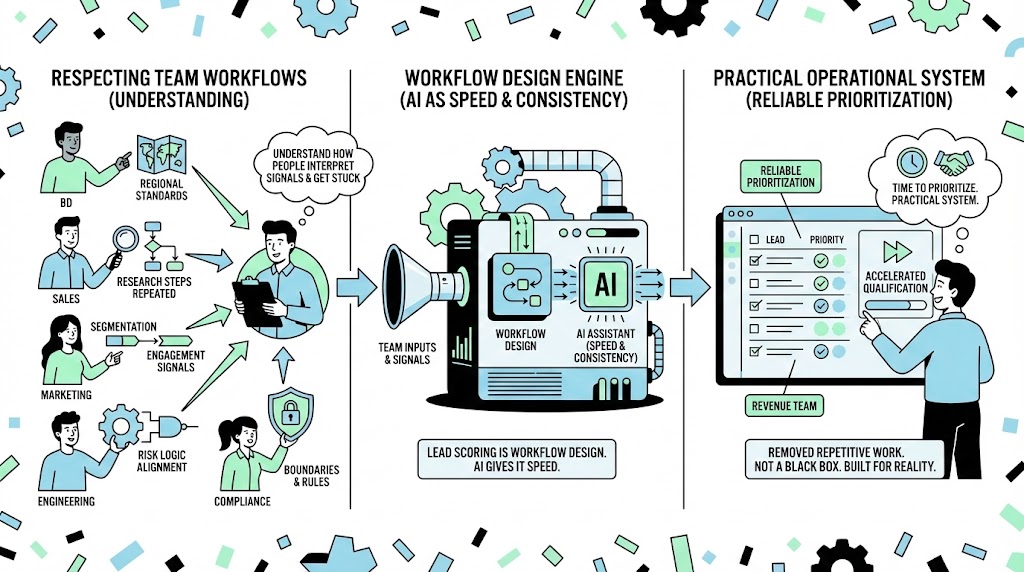

The truth is, the system didn’t succeed because of AI. It succeeded because it respected how each team actually worked. I sat with BD to understand their regional standards. I talked with sales about the research steps they repeated every single day. I worked with marketing to connect segmentation and engagement signals. I coordinated with engineering to align AI outputs with risk logic. And I made sure compliance was comfortable with every boundary and rule built into the system.

What I learned is that lead scoring is not really about scoring. It’s about workflow design. It’s about understanding how people interpret signals, where they get stuck, and what slows them down. AI just gives that workflow more speed and consistency.

The result was an assistant that removed repetitive work, accelerated qualification, and finally gave revenue teams a reliable way to prioritize their time. It wasn’t a black box. It wasn’t a magic answer generator. It was a practical, operational system built for the messy reality of fintech — and that is precisely why it worked.