One of the first things I realized after joining the team was that we were drowning in repetitive reporting work. Every month (and later every week), we’d receive a raw Excel export from HQ. It arrived as a giant attachment, full of inconsistent column names, mixed formats, missing values, and plenty of PII we couldn’t pass into any external tool. Useful data, yes — but not in a form anyone actually wanted to work with.

And like most teams, we did the same thing everyone does: open the file, clean it manually, merge a few sheets, delete a few columns, fix formatting, recalculate metrics, prepare summaries, share them in Slack, and then do the whole thing again tomorrow. It wasn’t “hard,” but it was repetitive enough that you could feel the time slipping away. Fifteen minutes a day isn’t much until you multiply it across months, and across multiple people.

That’s when I started thinking about whether a lightweight automation layer plus a custom GPT could take over the mechanical parts of the workflow. At the time, we didn’t have agents, we didn’t have built-in pipelines, and our engineering bandwidth was basically nonexistent. So whatever I built had to be no-code, safe for compliance, and simple enough to maintain without rewriting scripts every week.

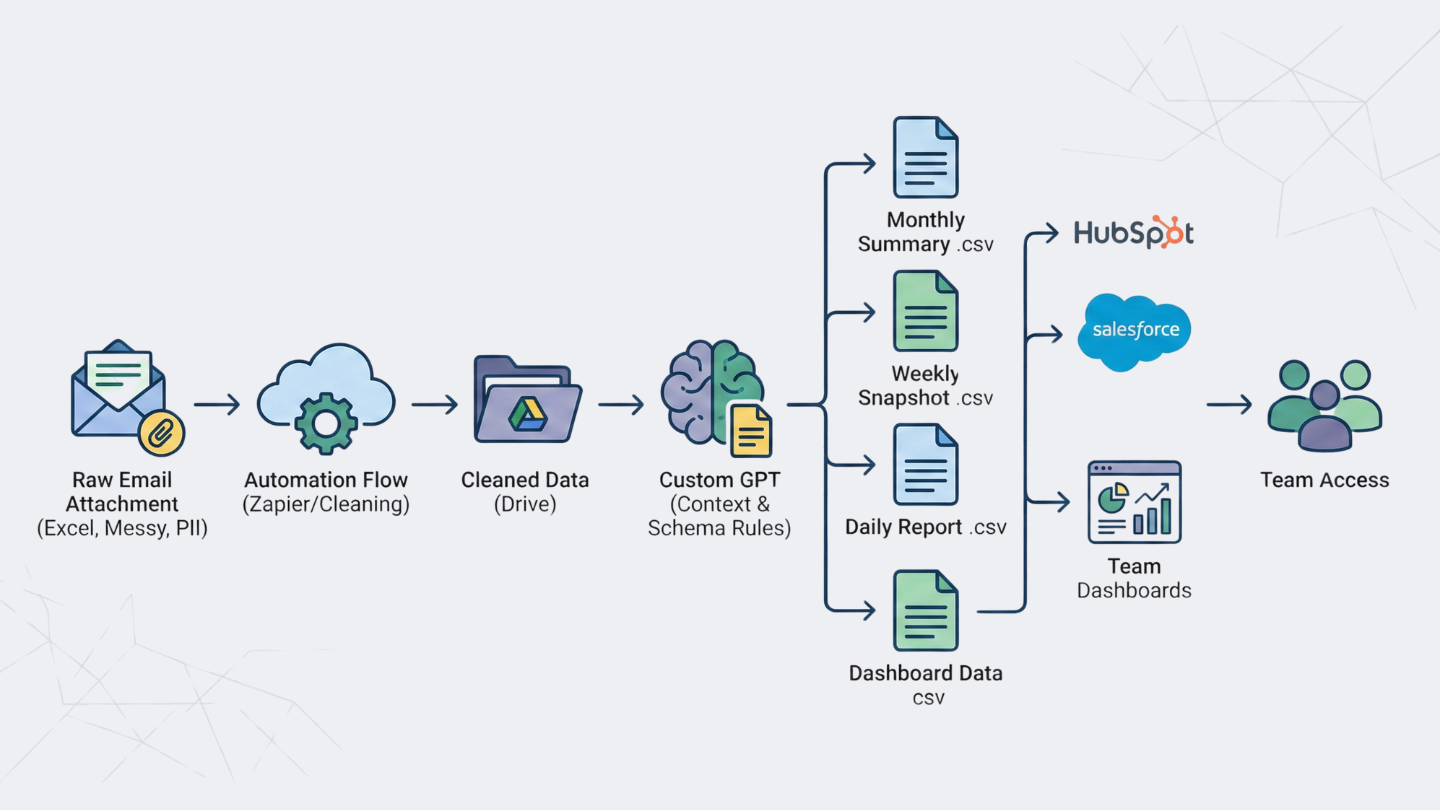

The first step was cleaning the data before it ever touched a language model. That meant building a Zapier flow that watched for specific inbound report emails, pulled the attachment, dropped it into a protected Drive folder, and then ran a cleaning process. Most of the work wasn’t “automation” in the fancy sense — it was renaming columns, normalizing values, stripping out sensitive fields, and reconciling multiple sheets that didn’t even agree on how to spell “KYC”. But once that cleanup flow existed, the whole operation immediately felt lighter. Every report came in clean, consistent, and predictable.
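The actual cleanup ran as a no-code Zapier flow, so there was no script to show. For readers who think in code, though, the same three operations (renaming columns, normalizing values, stripping sensitive fields) could be sketched roughly like this. Every column name, alias, and missing-value marker below is a hypothetical stand-in, not something from the real HQ export.

```python
import csv
import io

# Hypothetical mappings for illustration; the real export's headers are not shown in this post.
COLUMN_ALIASES = {
    "cust email": "email",
    "e-mail": "email",
    "kyc status": "kyc_status",
    "kyc_stat": "kyc_status",
    "region name": "region",
}
PII_COLUMNS = {"email", "full_name", "phone"}  # assumed sensitive fields
MISSING_MARKERS = {"", "n/a", "na", "-", "null"}  # assumed placeholders for "no value"


def normalize_header(name: str) -> str:
    """Lowercase and trim a header, then map known aliases to one canonical name."""
    key = " ".join(name.strip().lower().split())
    return COLUMN_ALIASES.get(key, key.replace(" ", "_"))


def clean_rows(raw_csv: str) -> list[dict[str, str]]:
    """Rename columns, blank out missing-value markers, and drop PII fields."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    cleaned = []
    for row in reader:
        out = {}
        for header, value in row.items():
            if header is None:
                continue  # extra cells beyond the header row are ignored
            column = normalize_header(header)
            if column in PII_COLUMNS:
                continue  # sensitive fields never reach the model
            text = (value or "").strip()
            out[column] = "" if text.lower() in MISSING_MARKERS else text
        cleaned.append(out)
    return cleaned
```

Calling `clean_rows` on a small export with headers like `Cust Email, KYC Status, Region Name` would return rows keyed by `kyc_status` and `region` only, with the email column gone and placeholders such as `N/A` turned into empty strings.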

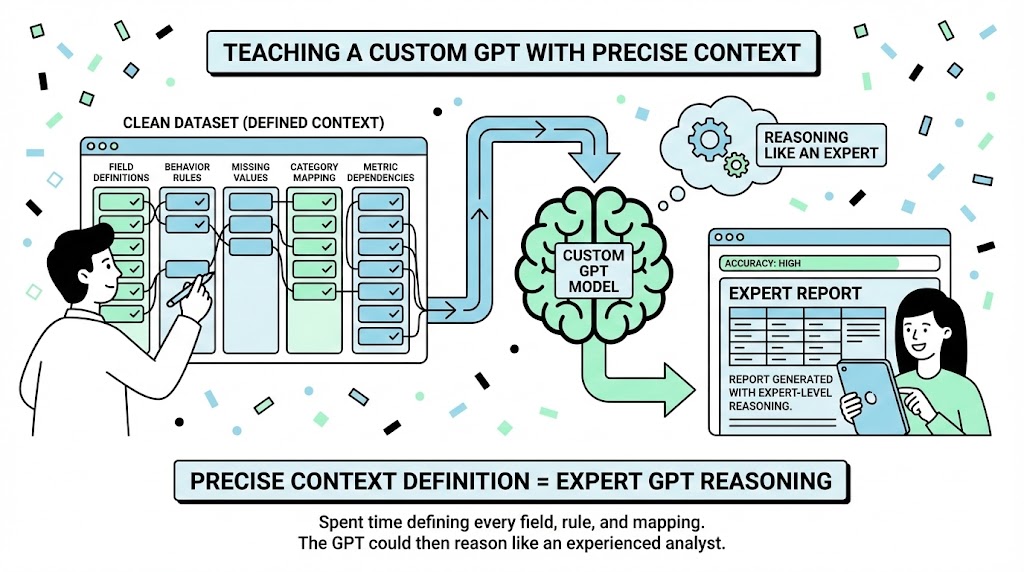

With a clean dataset in hand, the next challenge was teaching a custom GPT how to interpret it. A model can only be as reliable as the context you give it, so I spent a good chunk of time defining every field: what it meant, how it should behave, what counted as a missing value, how certain categories mapped to one another, and how one metric depended on another. It wasn’t complicated work, just very precise. The payoff was that the GPT could reason like someone who had been doing these reports for years.
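I wrote those definitions directly into the GPT’s instructions as plain text. One way to picture the structure of that data dictionary is as a small list of field specs rendered into instruction lines. This is only a sketch: the `FieldSpec` type, the `render_instructions` helper, and the three example fields are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """One entry in the data dictionary handed to the model."""
    name: str
    meaning: str
    missing_as: str = "blank"           # how a missing value should be read
    depends_on: tuple[str, ...] = ()    # other fields this metric is derived from


# Hypothetical fields for illustration only.
SCHEMA = [
    FieldSpec("signups", "Count of new accounts created in the period"),
    FieldSpec("kyc_passed", "Count of signups that completed KYC", missing_as="0"),
    FieldSpec("kyc_rate", "kyc_passed divided by signups", depends_on=("kyc_passed", "signups")),
]


def render_instructions(schema: list[FieldSpec]) -> str:
    """Turn the field specs into one bullet per field for the GPT's instructions."""
    lines = []
    for spec in schema:
        line = f"- {spec.name}: {spec.meaning}. Treat missing values as {spec.missing_as}."
        if spec.depends_on:
            line += f" Derived from: {', '.join(spec.depends_on)}."
        lines.append(line)
    return "\n".join(lines)
```

Keeping the definitions in one structured place like this means a change in business logic touches one entry, which matches how I maintained the instructions by hand.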

Once it understood the schema, the rest became almost fun. I fed it the cleaned file and asked it to generate a fixed set of outputs: the monthly acquisition summary, weekly performance snapshots, regional breakdowns, channel-level conversions, and several internal marketing dashboards. Eight different reports in total. All of them consistent. All of them formatted as CSV. All of them ready to upload into HubSpot or merge into Salesforce dashboards without any extra work.
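The GPT produced these reports from its instructions, not from a script. As a rough picture of what one of them involves, here is a sketch of a channel-level conversion report written out as CSV. The column names (`channel`, `leads`, `conversions`) and the definition of the rate as conversions divided by leads are assumptions for illustration, not the real report spec.

```python
import csv
import io
from collections import defaultdict


def channel_conversion_csv(rows: list[dict[str, str]]) -> str:
    """Aggregate cleaned rows per channel and return the report as CSV text."""
    totals = defaultdict(lambda: [0, 0])  # channel -> [leads, conversions]
    for row in rows:
        channel = row["channel"]
        # Blank cells (missing values after cleaning) count as zero.
        totals[channel][0] += int(row["leads"] or 0)
        totals[channel][1] += int(row["conversions"] or 0)

    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["channel", "leads", "conversions", "conversion_rate"])
    for channel in sorted(totals):  # fixed ordering keeps every run's output consistent
        leads, conversions = totals[channel]
        rate = conversions / leads if leads else 0.0
        writer.writerow([channel, leads, conversions, f"{rate:.4f}"])
    return buffer.getvalue()
```

The fixed header row and sorted channel order are the code equivalent of asking the GPT for the same layout every time, which is what made the files safe to load into other tools without touching them.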

From there, the idea naturally expanded into daily summaries. The GPT already understood the dataset and the business logic, so breaking the monthly framework into weekly and daily versions was straightforward. The model would return the analysis in a couple of minutes, and I could hand it to the team immediately. No more manual filtering, pivot tables, or “fixing” the export before we could do anything useful with it.

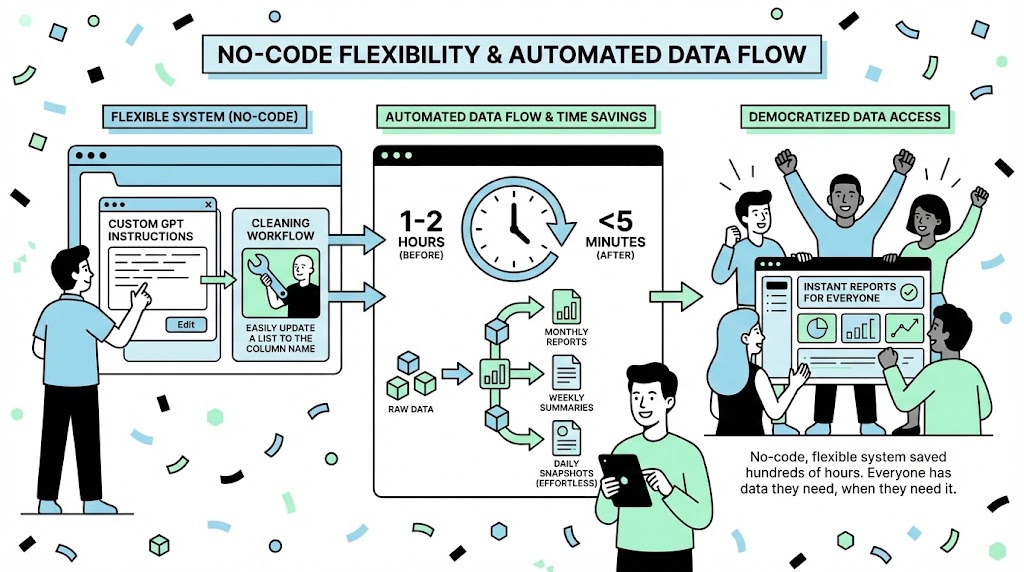

The interesting part is that none of this required real coding. I didn’t need to write Python, maintain scripts, or ask engineering for a favor every time a field changed. If something shifted in the business logic, I just updated the custom GPT’s instructions. If HQ changed a column name, I fixed it in the cleaning workflow. It stayed flexible, which was important because our data infrastructure wasn’t exactly stable.

The time savings added up fast. Monthly reports that used to take one to two hours dropped to under five minutes. Weekly summaries became almost trivial. And daily snapshots — something we never had time to produce before — became effortless. Over a full year, the system probably saved me more hours than I care to admit. But the bigger value was that everyone finally had the data they needed, when they needed it, without waiting for someone to “pull the report real quick.”

It also made the work more shareable. I could give the custom GPT to teammates who weren’t technical at all. They uploaded a cleaned file, got standardized outputs, and didn’t have to think about formulas or formatting. It democratized reporting in a way that felt more “real” than any BI tool we had at the time.

Looking back, the whole project was simple. Not simple in effort, but simple in philosophy: if a task repeats every day, a machine should do it. The GPT didn’t replace analysts or decision-making — it just removed the parts of the job nobody wants to spend their mornings on.

That alone made it worth building.