My involvement in developing Aitracker was rooted in the challenges our company faced running performance marketing at scale. The vision was clear: we needed an automated system that could track, optimize, and scale campaigns more efficiently, and that would let us share learnings and strategies across the team just as efficiently.

Sprint by Sprint

The development process followed an agile methodology. We broke the project into a series of biweekly sprints, each focused on a specific feature or problem area. I worked closely with the CEO and the engineering teams in China to design the logic behind core features such as A/B testing automation, budget scaling, and performance tagging.

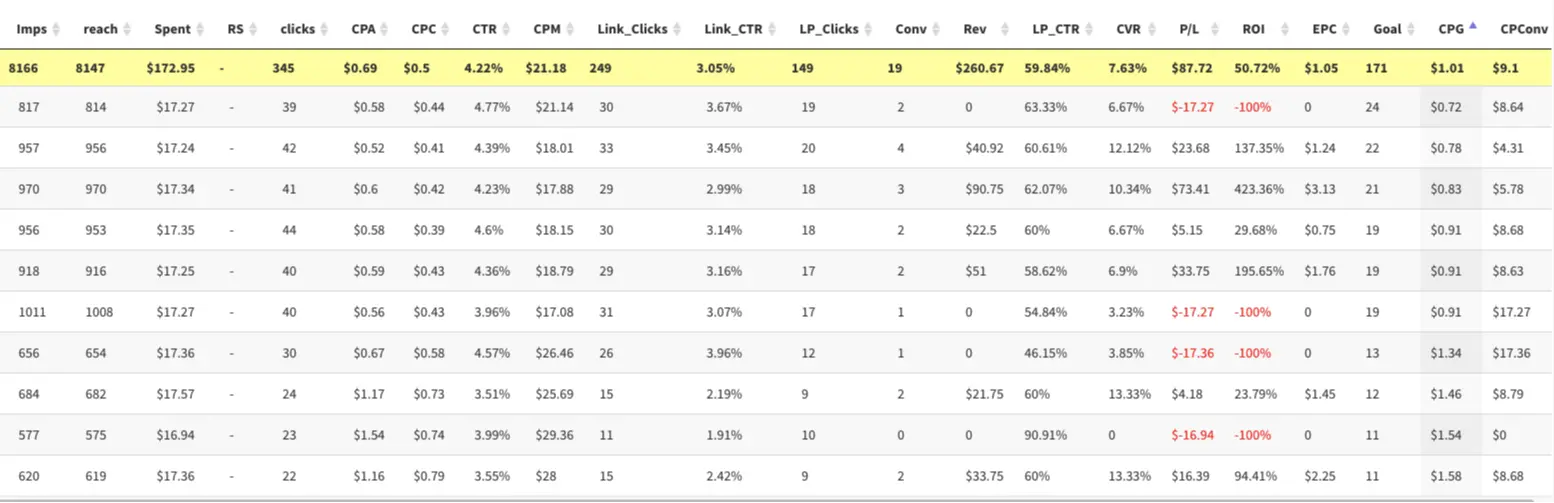

Each sprint was an opportunity for me to test features and refine our approach based on real campaign data. For example, in the early version I struggled to find the right approach to rule-based budget adjustments for Facebook campaigns. We tested many variations (different budget increase thresholds, duplication strategies, and scaling rules), which ultimately let us quickly apply the right combination of rules to grow campaigns fast while maintaining ROI. These tests were not always successful at first, but every failure was an opportunity to learn, adjust, and improve.
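To make "rule-based budget adjustment" more concrete, here is a minimal Python sketch of a single rule combination. It assumes performance is judged by ROI computed from ad spend and lead revenue, and that a campaign must reach a minimum number of conversions before any rule fires. Every name and threshold here (`ScalingRule`, `scale_above_roi`, `cut_below_roi`, `next_budget`, and so on) is hypothetical; the actual Aitracker rules are not described in this post.

```python
from dataclasses import dataclass


@dataclass
class ScalingRule:
    """Hypothetical rule parameters, not Aitracker's real thresholds."""
    scale_above_roi: float  # ROI at or above this triggers a budget increase
    cut_below_roi: float    # ROI below this triggers a budget decrease
    increase_pct: float     # fractional increase, e.g. 0.2 = +20%
    decrease_pct: float     # fractional decrease, e.g. 0.25 = -25%
    min_conversions: int    # do not act on too little data


@dataclass
class CampaignStats:
    daily_budget: float
    spend: float
    revenue: float          # assumed: revenue from leads sold
    conversions: int


def next_budget(stats: CampaignStats, rule: ScalingRule) -> float:
    """Propose the next daily budget under one rule combination."""
    # Hold the budget until there is enough data to trust the ROI figure.
    if stats.conversions < rule.min_conversions or stats.spend <= 0:
        return stats.daily_budget
    roi = (stats.revenue - stats.spend) / stats.spend
    if roi >= rule.scale_above_roi:
        return round(stats.daily_budget * (1 + rule.increase_pct), 2)
    if roi < rule.cut_below_roi:
        return round(stats.daily_budget * (1 - rule.decrease_pct), 2)
    # ROI is between the two thresholds: leave the budget unchanged.
    return stats.daily_budget


rule = ScalingRule(scale_above_roi=0.3, cut_below_roi=0.0,
                   increase_pct=0.2, decrease_pct=0.25, min_conversions=10)
print(next_budget(CampaignStats(100.0, 100.0, 150.0, 12), rule))
```

Under this framing, testing a different "rule combo" amounts to running the same campaign history through several `ScalingRule` instances and comparing how spend and ROI evolve.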

Challenges and Iterating on Solutions

We encountered several roadblocks that required creative problem-solving and relentless testing. One was incorporating dynamic lead selling prices into the automated decision-making process (we run hot lead transfers, and leads are sold at auction).

Initially, our database could not record and merge fluctuating CPA data and live selling prices from the auction platform quickly enough, which undermined the automated decisions. We tested multiple solutions, and I eventually found one that worked: using Tableau and a third-party data processing tool to process the data separately, then merging the cleaned data into Aitracker.
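The core of that merge is a join between ad-platform cost data and auction sale data on a shared campaign key. The plain-Python sketch below illustrates that join only; it is not the Tableau and third-party pipeline described above. It assumes each cost row is `(campaign_id, spend, leads)` and each sale row is `(campaign_id, sale_price)` for one sold lead. The function name and output fields are hypothetical.

```python
from collections import defaultdict


def merge_cost_and_sales(cost_rows, sale_rows):
    """Join cost rows and auction sale rows per campaign (illustrative only).

    cost_rows: iterable of (campaign_id, spend, leads)
    sale_rows: iterable of (campaign_id, sale_price), one row per sold lead
    """
    spend = defaultdict(float)
    leads = defaultdict(int)
    for campaign_id, cost, n_leads in cost_rows:
        # Several rows (e.g. ad sets) may belong to the same campaign.
        spend[campaign_id] += cost
        leads[campaign_id] += n_leads

    revenue = defaultdict(float)
    sold = defaultdict(int)
    for campaign_id, price in sale_rows:
        revenue[campaign_id] += price
        sold[campaign_id] += 1

    merged = {}
    for campaign_id in spend:
        if leads[campaign_id] == 0:
            continue  # CPA is undefined without leads
        n_sold = sold.get(campaign_id, 0)
        rev = revenue.get(campaign_id, 0.0)
        merged[campaign_id] = {
            "cpa": spend[campaign_id] / leads[campaign_id],
            "avg_sale_price": rev / n_sold if n_sold else 0.0,
            "profit": rev - spend[campaign_id],
        }
    return merged


costs = [("c1", 120.0, 2), ("c1", 80.0, 2), ("c2", 90.0, 0)]
sales = [("c1", 70.0), ("c1", 80.0), ("c1", 60.0), ("c3", 40.0)]
print(merge_cost_and_sales(costs, sales))
```

Sales for a campaign with no cost row (`c3` above) are dropped, which is one of the data-quality issues a real cleaning step would need to surface rather than silently ignore.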

Another challenge was ensuring that the automation did not scale campaigns beyond our buyers' maximum capacity, and that the company maintained healthy cash flow (each buyer has different payment terms and buying capacity, and these often change over time).

On the campaign side, I ran tests with different budget caps, scaling frequencies, and geographic targeting adjustments to get the right settings and rules in place. On the buyer side, I worked with developers to integrate a buyer management system so we could manage each buyer's purchasing capacity and payment terms in a single platform.
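One way to connect those two sides is to cap any proposed budget by how many leads buyers can still absorb. The minimal sketch below assumes each buyer has a daily lead cap and a credit limit on unpaid invoices, and that spend converts to leads at an expected cost per lead. All names and fields (`Buyer`, `buyer_headroom`, `cap_budget`, `expected_cpl`) are hypothetical and not taken from Aitracker's buyer management system.

```python
import math
from dataclasses import dataclass


@dataclass
class Buyer:
    """Hypothetical buyer record; fields are illustrative assumptions."""
    name: str
    daily_lead_cap: int         # max leads the buyer accepts per day
    leads_delivered_today: int
    price_per_lead: float
    credit_limit: float         # max unpaid balance we will carry
    outstanding_balance: float  # invoiced but not yet paid


def buyer_headroom(b: Buyer) -> int:
    """Leads this buyer can still take today within both capacity and credit."""
    capacity_left = b.daily_lead_cap - b.leads_delivered_today
    credit_left = b.credit_limit - b.outstanding_balance
    # Each additional lead adds price_per_lead to the unpaid balance.
    if b.price_per_lead > 0:
        credit_leads = math.floor(credit_left / b.price_per_lead)
    else:
        credit_leads = capacity_left
    return max(0, min(capacity_left, credit_leads))


def cap_budget(proposed_budget: float, buyers: list, expected_cpl: float) -> float:
    """Never spend more than what buyers can absorb at the expected cost per lead."""
    total_leads = sum(buyer_headroom(b) for b in buyers)
    return round(min(proposed_budget, total_leads * expected_cpl), 2)


buyers = [
    Buyer("A", 50, 40, 30.0, 1000.0, 850.0),  # 10 slots left, credit for 5 leads
    Buyer("B", 20, 0, 25.0, 500.0, 600.0),    # over its credit limit: 0 leads
]
print(cap_budget(250.0, buyers, expected_cpl=20.0))
```

Treating credit as a hard limit alongside capacity is one design choice for protecting cash flow; a softer alternative would be to lower a buyer's priority as their unpaid balance grows.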

Final Product

After three months of collaboration, continuous testing, and refinement, we had a homegrown product that transformed the way we managed campaigns. It became a core asset in our marketing toolkit, significantly improving our efficiency and our ability to scale. The iterative development process was challenging but rewarding, and it taught me the importance of resilience, team collaboration, and data-driven optimization.

A Learning Journey in Performance Marketing Automation

Being part of Aitracker’s development was about more than building a tool; it was about reshaping how our team approached performance marketing at scale. Through continuous testing, learning, and refinement, I helped create a platform that not only improved efficiency but also empowered our team to operate with greater precision and profitability.